Public Member Functions

| Return type | Member | Description |
|---|---|---|
| void | detect (InputArray frame, std::vector< std::vector< Point > > &detections, std::vector< float > &confidences) const | Performs detection. |
| void | detect (InputArray frame, std::vector< std::vector< Point > > &detections) const | |
| void | detectTextRectangles (InputArray frame, std::vector< cv::RotatedRect > &detections, std::vector< float > &confidences) const | Performs detection. |
| void | detectTextRectangles (InputArray frame, std::vector< cv::RotatedRect > &detections) const | |

Public Member Functions inherited from cv::dnn::Model

| Return type | Member | Description |
|---|---|---|
| | Model () | |
| | Model (const Model &)=default | |
| | Model (Model &&)=default | |
| | Model (const String &model, const String &config="") | Create model from deep learning network represented in one of the supported formats. The order of the model and config arguments does not matter. |
| | Model (const Net &network) | Create model from deep learning network. |
| Impl * | getImpl () const | |
| Impl & | getImplRef () const | |
| Net & | getNetwork_ () const | |
| Net & | getNetwork_ () | |
| | operator Net & () const | |
| Model & | operator= (const Model &)=default | |
| Model & | operator= (Model &&)=default | |
| void | predict (InputArray frame, OutputArrayOfArrays outs) const | Given the input frame, create input blob, run net and return the output blobs. |
| Model & | setInputCrop (bool crop) | Set flag crop for frame. |
| Model & | setInputMean (const Scalar &mean) | Set mean value for frame. |
| void | setInputParams (double scale=1.0, const Size &size=Size(), const Scalar &mean=Scalar(), bool swapRB=false, bool crop=false) | Set preprocessing parameters for frame. |
| Model & | setInputScale (double scale) | Set scalefactor value for frame. |
| Model & | setInputSize (const Size &size) | Set input size for frame. |
| Model & | setInputSize (int width, int height) | |
| Model & | setInputSwapRB (bool swapRB) | Set flag swapRB for frame. |
| Model & | setPreferableBackend (dnn::Backend backendId) | |
| Model & | setPreferableTarget (dnn::Target targetId) | |
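setInputSize() and setInputCrop() decide how a frame is fitted to the network input. The sketch below computes that geometry in plain C++, assuming the Model preprocessing follows the documented cv::dnn::blobFromImage rules: with crop enabled, the image is resized so one side equals the target and the other is equal or larger, then a center crop is taken; with crop disabled, the image is resized directly without preserving the aspect ratio. `FitPlan` and `planFit` are hypothetical names, not OpenCV API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical result type: how a srcW x srcH frame is fitted to dstW x dstH.
struct FitPlan {
    int scaledW, scaledH;  // size after resizing
    int cropX, cropY;      // top-left corner of the center crop (0 when not cropping)
};

// Sketch of the resize/crop geometry under blobFromImage-style semantics (assumed).
FitPlan planFit(int srcW, int srcH, int dstW, int dstH, bool crop) {
    if (!crop || (srcW == dstW && srcH == dstH)) {
        // No crop: plain resize to the target size; the aspect ratio may change.
        return {dstW, dstH, 0, 0};
    }
    // Crop: scale by the larger factor so the resized image covers the target...
    float f = std::max(dstW / static_cast<float>(srcW),
                       dstH / static_cast<float>(srcH));
    int w = static_cast<int>(std::lround(srcW * f));
    int h = static_cast<int>(std::lround(srcH * f));
    // ...then keep the centered dstW x dstH window.
    return {w, h, (w - dstW) / 2, (h - dstH) / 2};
}
```

For a 640x480 frame and a 320x320 input with crop enabled, this sketch scales to 427x320 and crops starting at x = 53, so the left and right edges of the frame are discarded.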

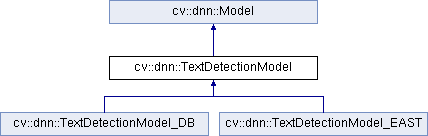

Base class for text detection networks.

| void cv::dnn::TextDetectionModel::detect (InputArray frame, std::vector< std::vector< Point > > &detections, std::vector< float > &confidences) const |
|---|

| Python: |
|---|
| cv.dnn_TextDetectionModel.detect( frame ) -> detections, confidences |
| cv.dnn_TextDetectionModel.detect( frame ) -> detections |

Performs detection.

Given the input frame, this method prepares the network input, runs inference, post-processes the network output, and returns the resulting detections.
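The "prepare network input" step is driven by the scale, mean, and swapRB values set with setInputParams() or the individual setters. A minimal per-pixel sketch, assuming the documented cv::dnn::blobFromImage formula `(input - mean) * scalefactor`, with swapRB reordering BGR to RGB and the mean given in the output channel order. `normalizePixel` is a hypothetical helper, not an OpenCV function, and resizing/cropping is ignored here.

```cpp
#include <array>
#include <cassert>
#include <utility>

using Pixel3 = std::array<double, 3>;

// Hypothetical helper: normalizes one 3-channel pixel as out = (in - mean) * scale.
Pixel3 normalizePixel(Pixel3 px, const Pixel3& mean, double scale, bool swapRB) {
    if (swapRB) {
        std::swap(px[0], px[2]);  // BGR -> RGB
    }
    Pixel3 out{};
    for (int c = 0; c < 3; ++c) {
        // mean[c] applies to channel c in the (possibly swapped) output order
        out[c] = (px[c] - mean[c]) * scale;
    }
    return out;
}
```

With a BGR pixel (10, 20, 30), mean (1, 2, 3), scale 0.5 and swapRB enabled, the sketch yields (14.5, 9, 3.5).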

Each result is a quadrangle given as 4 points in this order:

- bottom-left

- top-left

- top-right

- bottom-right

Use the cv::getPerspectiveTransform function (together with cv::warpPerspective) to retrieve the image region without perspective distortion.
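cv::getPerspectiveTransform builds a 3x3 homography from four point pairs by solving an 8-unknown linear system. The standalone sketch below reproduces that math (with Gauss-Jordan elimination, not OpenCV's own solver) and maps a quadrangle in the bottom-left, top-left, top-right, bottom-right order onto an axis-aligned rectangle. In real code you would call cv::getPerspectiveTransform and cv::warpPerspective; `Pt`, `Mat3`, `perspectiveFromQuads`, `applyH`, and `targetRect` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <utility>

struct Pt { double x, y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Solves for H (with H[2][2] = 1) such that H maps src[i] to dst[i].
// Assumes a non-degenerate quad (no three points collinear).
Mat3 perspectiveFromQuads(const std::array<Pt, 4>& src, const std::array<Pt, 4>& dst) {
    double A[8][9] = {};  // augmented matrix [M | b]
    for (int i = 0; i < 4; ++i) {
        double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        double r1[9] = {x, y, 1, 0, 0, 0, -x * u, -y * u, u};
        double r2[9] = {0, 0, 0, x, y, 1, -x * v, -y * v, v};
        for (int j = 0; j < 9; ++j) { A[2 * i][j] = r1[j]; A[2 * i + 1][j] = r2[j]; }
    }
    // Gauss-Jordan elimination with partial pivoting.
    for (int col = 0; col < 8; ++col) {
        int piv = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(A[r][col]) > std::fabs(A[piv][col])) piv = r;
        for (int j = 0; j < 9; ++j) std::swap(A[col][j], A[piv][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            double f = A[r][col] / A[col][col];
            for (int j = col; j < 9; ++j) A[r][j] -= f * A[col][j];
        }
    }
    double c[8];
    for (int k = 0; k < 8; ++k) c[k] = A[k][8] / A[k][k];
    return {{{c[0], c[1], c[2]}, {c[3], c[4], c[5]}, {c[6], c[7], 1.0}}};
}

// Applies H to a point (projective division by w).
Pt applyH(const Mat3& H, Pt p) {
    double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {(H[0][0] * p.x + H[0][1] * p.y + H[0][2]) / w,
            (H[1][0] * p.x + H[1][1] * p.y + H[1][2]) / w};
}

// Target rectangle for a quad ordered BL, TL, TR, BR; size taken from its top and left edges.
std::array<Pt, 4> targetRect(const std::array<Pt, 4>& q) {
    double w = std::hypot(q[2].x - q[1].x, q[2].y - q[1].y);  // TL -> TR
    double h = std::hypot(q[0].x - q[1].x, q[0].y - q[1].y);  // TL -> BL
    return {{{0, h - 1}, {0, 0}, {w - 1, 0}, {w - 1, h - 1}}};
}
```

Every corner of the detected quadrangle lands on the matching corner of the target rectangle, which is what lets cv::warpPerspective produce an upright crop of the text region.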

- Note

- If the DL model doesn't support this kind of output, the result may be derived from the detectTextRectangles() output.
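One way to derive a quadrangle from a rotated rectangle is to take its corners in the order documented for cv::RotatedRect::points() (bottom-left, top-left, top-right, bottom-right), which matches the order listed above. The sketch below reimplements that corner formula with hypothetical stand-in types `Pt2` and `RotRect`. It shows the geometry only and is not claimed to be the exact code path TextDetectionModel uses.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Pt2 { float x, y; };
// Hypothetical stand-in for cv::RotatedRect: center, size, angle in degrees.
struct RotRect { Pt2 center; float width, height, angleDeg; };

// Corners in bottom-left, top-left, top-right, bottom-right order
// (image coordinates, y pointing down).
std::array<Pt2, 4> cornersOf(const RotRect& r) {
    const double kPi = 3.14159265358979323846;
    double rad = r.angleDeg * kPi / 180.0;
    float b = static_cast<float>(std::cos(rad)) * 0.5f;
    float a = static_cast<float>(std::sin(rad)) * 0.5f;
    std::array<Pt2, 4> p{};
    p[0] = {r.center.x - a * r.height - b * r.width, r.center.y + b * r.height - a * r.width};
    p[1] = {r.center.x + a * r.height - b * r.width, r.center.y - b * r.height - a * r.width};
    p[2] = {2 * r.center.x - p[0].x, 2 * r.center.y - p[0].y};  // opposite corner of p[0]
    p[3] = {2 * r.center.x - p[1].x, 2 * r.center.y - p[1].y};  // opposite corner of p[1]
    return p;
}
```

For an unrotated 40x10 rectangle centered at (50, 20), the sketch returns (30, 25), (30, 15), (70, 15), (70, 25).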

- Parameters

| Direction | Parameter | Description |
|---|---|---|
| [in] | frame | The input image |
| [out] | detections | Array with the detections' quadrangles (4 points per result) |
| [out] | confidences | Array with the detection confidences |
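A common post-step is to drop low-confidence results. The sketch below assumes that `confidences[i]` belongs to `detections[i]` and keeps both arrays aligned while filtering in place. `IPoint`, `Quad`, and `filterByConfidence` are hypothetical stand-ins (for `cv::Point` and a 4-point result), and the threshold value is arbitrary.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct IPoint { int x, y; };       // hypothetical stand-in for cv::Point
using Quad = std::vector<IPoint>;  // 4 points per detection

// Keeps detections with confidence >= minConf, preserving their original order.
void filterByConfidence(std::vector<Quad>& detections,
                        std::vector<float>& confidences, float minConf) {
    std::size_t kept = 0;
    for (std::size_t i = 0; i < detections.size() && i < confidences.size(); ++i) {
        if (confidences[i] >= minConf) {
            detections[kept] = detections[i];  // copy keeps self-assignment safe
            confidences[kept] = confidences[i];
            ++kept;
        }
    }
    detections.resize(kept);
    confidences.resize(kept);
}
```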
