|

OpenCV

Open Source Computer Vision

|

|

OpenCV

Open Source Computer Vision

|

Classes | |

| class | cv::ml::ANN_MLP |

| Artificial Neural Networks - Multi-Layer Perceptrons. More... | |

| class | cv::ml::Boost |

| Boosted tree classifier derived from DTrees. More... | |

| class | cv::ml::DTrees |

| The class represents a single decision tree or a collection of decision trees. More... | |

| class | cv::ml::EM |

| The class implements the Expectation Maximization algorithm. More... | |

| class | cv::ml::SVM::Kernel |

| class | cv::ml::KNearest |

| The class implements K-Nearest Neighbors model. More... | |

| class | cv::ml::LogisticRegression |

| Implements Logistic Regression classifier. More... | |

| class | cv::ml::DTrees::Node |

| The class represents a decision tree node. More... | |

| class | cv::ml::NormalBayesClassifier |

| Bayes classifier for normally distributed data. More... | |

| class | cv::ml::ParamGrid |

| The structure represents the logarithmic grid range of statmodel parameters. More... | |

| class | cv::ml::RTrees |

| The class implements the random forest predictor. More... | |

| class | cv::ml::DTrees::Split |

| The class represents split in a decision tree. More... | |

| class | cv::ml::StatModel |

| Base class for statistical models in OpenCV ML. More... | |

| class | cv::ml::SVM |

| Support Vector Machines. More... | |

| class | cv::ml::TrainData |

| Class encapsulating training data. More... | |

Functions | |

| void | cv::ml::createConcentricSpheresTestSet (int nsamples, int nfeatures, int nclasses, OutputArray samples, OutputArray responses) |

| Creates test set. More... | |

| void | cv::ml::randMVNormal (InputArray mean, InputArray cov, int nsamples, OutputArray samples) |

| Generates sample from multivariate normal distribution. More... | |

The Machine Learning Library (MLL) is a set of classes and functions for statistical classification, regression, and clustering of data.

Most of the classification and regression algorithms are implemented as C++ classes. As the algorithms have different sets of features (like an ability to handle missing measurements or categorical input variables), there is a little common ground between the classes. This common ground is defined by the class cv::ml::StatModel that all the other ML classes are derived from.

See detailed overview here: Machine Learning Overview.

possible activation functions

| enum cv::ml::ErrorTypes |

SVM kernel type

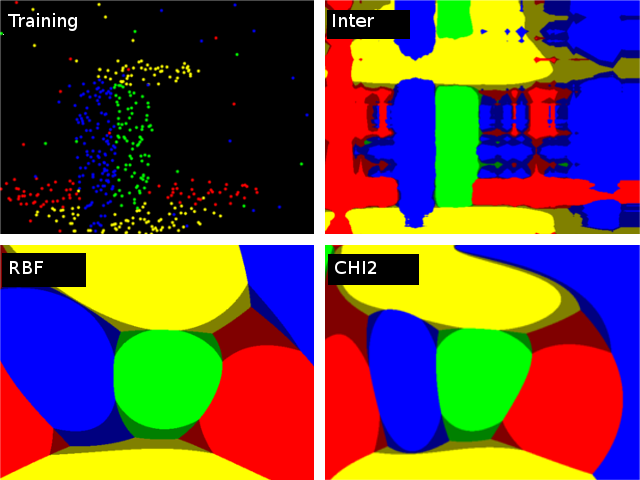

A comparison of different kernels on the following 2D test case with four classes. Four SVM::C_SVC SVMs have been trained (one against rest) with auto_train. Evaluation on three different kernels (SVM::CHI2, SVM::INTER, SVM::RBF). The color depicts the class with max score. Bright means max-score > 0, dark means max-score < 0.

| Enumerator | |

|---|---|

| CUSTOM |

Returned by SVM::getKernelType in case when custom kernel has been set |

| LINEAR |

Linear kernel. No mapping is done, linear discrimination (or regression) is done in the original feature space. It is the fastest option. K(x_i, x_j) = x_i^T x_j. |

| POLY |

Polynomial kernel: K(x_i, x_j) = (\gamma x_i^T x_j + coef0)^{degree}, \gamma > 0. |

| RBF |

Radial basis function (RBF), a good choice in most cases. K(x_i, x_j) = e^{-\gamma ||x_i - x_j||^2}, \gamma > 0. |

| SIGMOID |

Sigmoid kernel: K(x_i, x_j) = \tanh(\gamma x_i^T x_j + coef0). |

| CHI2 |

Exponential Chi2 kernel, similar to the RBF kernel: K(x_i, x_j) = e^{-\gamma \chi^2(x_i,x_j)}, \chi^2(x_i,x_j) = (x_i-x_j)^2/(x_i+x_j), \gamma > 0. |

| INTER |

Histogram intersection kernel. A fast kernel. K(x_i, x_j) = min(x_i,x_j). |

| enum cv::ml::SampleTypes |

Train options

Available training methods

| Enumerator | |

|---|---|

| BACKPROP |

The back-propagation algorithm. |

| RPROP |

The RPROP algorithm. See [118] for details. |

Implementations of KNearest algorithm.

| Enumerator | |

|---|---|

| BRUTE_FORCE | |

| KDTREE | |

| enum cv::ml::SVM::Types |

SVM type

| Enumerator | |

|---|---|

| C_SVC |

C-Support Vector Classification. n-class classification (n \geq 2), allows imperfect separation of classes with penalty multiplier C for outliers. |

| NU_SVC |

\nu-Support Vector Classification. n-class classification with possible imperfect separation. Parameter \nu (in the range 0..1, the larger the value, the smoother the decision boundary) is used instead of C. |

| ONE_CLASS |

Distribution Estimation (One-class SVM). All the training data are from the same class, SVM builds a boundary that separates the class from the rest of the feature space. |

| EPS_SVR |

\epsilon-Support Vector Regression. The distance between feature vectors from the training set and the fitting hyper-plane must be less than p. For outliers the penalty multiplier C is used. |

| NU_SVR |

\nu-Support Vector Regression. \nu is used instead of p. See [26] for details. |

| enum cv::ml::EM::Types |

Type of covariation matrices.

| Enumerator | |

|---|---|

| COV_MAT_SPHERICAL |

A scaled identity matrix \mu_k * I. There is the only parameter \mu_k to be estimated for each matrix. The option may be used in special cases, when the constraint is relevant, or as a first step in the optimization (for example in case when the data is preprocessed with PCA). The results of such preliminary estimation may be passed again to the optimization procedure, this time with covMatType=EM::COV_MAT_DIAGONAL. |

| COV_MAT_DIAGONAL |

A diagonal matrix with positive diagonal elements. The number of free parameters is d for each matrix. This is most commonly used option yielding good estimation results. |

| COV_MAT_GENERIC |

A symmetric positively defined matrix. The number of free parameters in each matrix is about d^2/2. It is not recommended to use this option, unless there is pretty accurate initial estimation of the parameters and/or a huge number of training samples. |

| COV_MAT_DEFAULT | |

| enum cv::ml::Boost::Types |

Boosting type. Gentle AdaBoost and Real AdaBoost are often the preferable choices.

| void cv::ml::createConcentricSpheresTestSet | ( | int | nsamples, |

| int | nfeatures, | ||

| int | nclasses, | ||

| OutputArray | samples, | ||

| OutputArray | responses | ||

| ) |

Creates test set.

| void cv::ml::randMVNormal | ( | InputArray | mean, |

| InputArray | cov, | ||

| int | nsamples, | ||

| OutputArray | samples | ||

| ) |

Generates sample from multivariate normal distribution.

| mean | an average row vector |

| cov | symmetric covariation matrix |

| nsamples | returned samples count |

| samples | returned samples array |

1.8.9.1

1.8.9.1