Goal

In this chapter,

- We will understand the concepts of optical flow and its estimation using the Lucas-Kanade method.

- We will use functions like cv.calcOpticalFlowPyrLK() to track feature points in a video.

- We will create a dense optical flow field using the cv.calcOpticalFlowFarneback() method.

Optical Flow

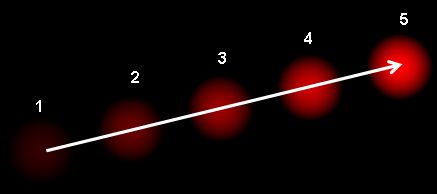

Optical flow is the pattern of apparent motion of image objects between two consecutive frames caused by the movement of object or camera. It is 2D vector field where each vector is a displacement vector showing the movement of points from first frame to second. Consider the image below (Image Courtesy: Wikipedia article on Optical Flow).

image

It shows a ball moving in 5 consecutive frames. The arrow shows its displacement vector. Optical flow has many applications in areas like:

- Structure from Motion

- Video Compression

- Video Stabilization ...

Optical flow works on several assumptions:

- The pixel intensities of an object do not change between consecutive frames.

- Neighbouring pixels have similar motion.

Consider a pixel \(I(x,y,t)\) in the first frame. (Note that a new dimension, time, is added here. Earlier we were working with single images, so there was no need for time.) It moves by a distance \((dx,dy)\) in the next frame, taken after time \(dt\). Since those pixels are the same and the intensity does not change, we can say:

\[I(x,y,t) = I(x+dx, y+dy, t+dt)\]

Then take the Taylor series approximation of the right-hand side, remove common terms and divide by \(dt\) to get the following equation:

\[f_x u + f_y v + f_t = 0 \;\]

where:

\[f_x = \frac{\partial f}{\partial x} \; ; \; f_y = \frac{\partial f}{\partial y}\]

\[u = \frac{dx}{dt} \; ; \; v = \frac{dy}{dt}\]

The above equation is called the optical flow equation. In it, we can compute \(f_x\) and \(f_y\): they are the image gradients. Similarly, \(f_t\) is the gradient along time. But \((u,v)\) is unknown, and we cannot solve one equation with two unknowns. Several methods have been proposed to solve this problem, and one of them is Lucas-Kanade.
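For readers who want the intermediate step, the Taylor expansion mentioned above can be written out as follows. This is a sketch of the usual first-order derivation. It assumes that \(f\) in the optical flow equation denotes the same intensity function written as \(I\) earlier, and it drops all higher-order terms.

\[I(x+dx, y+dy, t+dt) \approx I(x,y,t) + \frac{\partial I}{\partial x} dx + \frac{\partial I}{\partial y} dy + \frac{\partial I}{\partial t} dt\]

Substituting this into \(I(x,y,t) = I(x+dx, y+dy, t+dt)\) and cancelling the common term \(I(x,y,t)\) leaves:

\[\frac{\partial I}{\partial x} dx + \frac{\partial I}{\partial y} dy + \frac{\partial I}{\partial t} dt = 0\]

Dividing by \(dt\) and writing \(f_t = \frac{\partial f}{\partial t}\) gives \(f_x u + f_y v + f_t = 0\).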

Lucas-Kanade method

We have seen an assumption before, that all the neighbouring pixels have similar motion. The Lucas-Kanade method takes a 3x3 patch around the point and assumes that all 9 points have the same motion. We can find \((f_x, f_y, f_t)\) for these 9 points. So now our problem becomes solving 9 equations with two unknowns, which is over-determined. A better solution is obtained with the least-squares fit method. Below is the final solution, which is a two-equation, two-unknown problem that we solve to get the flow:

\[\begin{bmatrix} u \\ v \end{bmatrix} = \begin{bmatrix} \sum_{i}{f_{x_i}}^2 & \sum_{i}{f_{x_i} f_{y_i} } \\ \sum_{i}{f_{x_i} f_{y_i}} & \sum_{i}{f_{y_i}}^2 \end{bmatrix}^{-1} \begin{bmatrix} - \sum_{i}{f_{x_i} f_{t_i}} \\ - \sum_{i}{f_{y_i} f_{t_i}} \end{bmatrix}\]

(Note the similarity of the inverse matrix with the Harris corner detector. It indicates that corners are better points to track.)
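The least-squares solution above can be tried out on synthetic numbers. The following is a minimal NumPy sketch, not OpenCV's implementation: the function name `lucas_kanade_patch` is hypothetical, and the gradients are random values constructed so that every point in the 3x3 patch satisfies the optical flow equation exactly for a known \((u,v)\).

```python
import numpy as np

def lucas_kanade_patch(fx, fy, ft):
    """Solve the 2x2 normal equations for (u, v) over one patch.

    fx, fy, ft hold the spatial and temporal gradients at each patch pixel.
    """
    fx, fy, ft = (np.asarray(a, dtype=np.float64).ravel() for a in (fx, fy, ft))
    # Left-hand matrix: sums of gradient products over the patch
    A = np.array([[np.sum(fx * fx), np.sum(fx * fy)],
                  [np.sum(fx * fy), np.sum(fy * fy)]])
    # Right-hand side: negated sums of spatial-temporal products
    b = -np.array([np.sum(fx * ft), np.sum(fy * ft)])
    return np.linalg.solve(A, b)

# Synthetic 3x3 patch: random spatial gradients and a known motion
rng = np.random.default_rng(0)
fx = rng.normal(size=(3, 3))
fy = rng.normal(size=(3, 3))
true_flow = np.array([0.5, -0.25])
# Temporal gradient chosen so that fx*u + fy*v + ft = 0 at every pixel
ft = -(fx * true_flow[0] + fy * true_flow[1])

flow = lucas_kanade_patch(fx, fy, ft)
print(flow)  # recovers true_flow up to floating-point error
```

If the patch has almost no gradient in one direction, the 2x2 matrix becomes close to singular and the solve is unreliable, which is one way to see why corners make good points to track.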

So from the user's point of view, the idea is simple: we give some points to track, and we receive the optical flow vectors of those points. But again there are some problems. Until now, we were dealing with small motions, so the method fails when there is a large motion. To deal with this we use pyramids. When we go up in the pyramid, small motions are removed and large motions become small motions. So by applying Lucas-Kanade there, we get optical flow along with the scale.
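The effect of the pyramid on motion size can be illustrated with a small NumPy sketch. It is a simplification made for this example: each level is built by plain 2x2 block averaging (the hypothetical helper `downsample2`), whereas real image pyramids normally apply Gaussian smoothing before subsampling. The displacement of a bright square is measured at each level from its intensity-weighted centroid.

```python
import numpy as np

def downsample2(img):
    """Halve the resolution by averaging 2x2 blocks (stand-in for a pyramid level)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def centroid_x(img):
    """Intensity-weighted mean column index."""
    cols = np.arange(img.shape[1])
    return float((img.sum(axis=0) * cols).sum() / img.sum())

# A 4x4 bright square that moves 16 pixels to the right between two frames
frame0 = np.zeros((64, 64))
frame0[8:12, 8:12] = 1.0
frame1 = np.zeros((64, 64))
frame1[8:12, 24:28] = 1.0

displacements = []
a, b = frame0, frame1
for level in range(3):
    displacements.append(centroid_x(b) - centroid_x(a))
    a, b = downsample2(a), downsample2(b)

print(displacements)  # [16.0, 8.0, 4.0]
```

Each level halves the apparent motion, so a displacement that is too large for the small-motion assumption at full resolution becomes small enough a few levels up.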

Lucas-Kanade Optical Flow in OpenCV

OpenCV provides all of this in a single function, cv.calcOpticalFlowPyrLK(). Here, we create a simple application which tracks some points in a video. To decide the points, we use cv.goodFeaturesToTrack(). We take the first frame, detect some Shi-Tomasi corner points in it, then iteratively track those points using Lucas-Kanade optical flow. To cv.calcOpticalFlowPyrLK() we pass the previous frame, the previous points and the next frame. It returns the next points along with status values, which are 1 if the next point is found and 0 otherwise. We iteratively pass these next points as the previous points in the next step. See the code below:
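Before the full samples, here is how the status values are typically used to keep only the successfully tracked points. This sketch uses NumPy only. The arrays are made-up stand-ins with the shapes the Python sample works with (points as N x 1 x 2, status as N x 1), so no video is needed to run it.

```python
import numpy as np

# Hypothetical result of one tracking step for three points
p0 = np.array([[[10.0, 20.0]], [[30.0, 40.0]], [[50.0, 60.0]]], dtype=np.float32)
p1 = np.array([[[11.0, 20.5]], [[0.0, 0.0]], [[52.0, 61.0]]], dtype=np.float32)
st = np.array([[1], [0], [1]], dtype=np.uint8)  # second point was lost

# Boolean indexing drops the lost point and flattens to shape (K, 2)
good_new = p1[st == 1]
good_old = p0[st == 1]

# Reshape back to (K, 1, 2) before feeding the points into the next step
p0_next = good_new.reshape(-1, 1, 2)
print(good_new.shape, p0_next.shape)  # (2, 2) (2, 1, 2)
```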

C++

- Code at glance:

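#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;
using namespace std;

int main(int argc, char **argv)
{
    const string about =
        "This sample demonstrates Lucas-Kanade Optical Flow calculation.\n"
        "The example file can be downloaded from:\n"
        " https://www.bogotobogo.com/python/OpenCV_Python/images/mean_shift_tracking/slow_traffic_small.mp4";
    const string keys =
        "{ h help | | print this help message }"
        "{ @image | vtest.avi | path to image file }";
    CommandLineParser parser(argc, argv, keys);
    parser.about(about);
    if (parser.has("help"))
    {
        parser.printMessage();
        return 0;
    }
    string filename = samples::findFile(parser.get<string>("@image"));
    if (!parser.check())
    {
        parser.printErrors();
        return 0;
    }

    VideoCapture capture(filename);
    if (!capture.isOpened()){
        // Error in opening the video input
        cerr << "Unable to open file!" << endl;
        return 0;
    }

    // Create some random colors
    vector<Scalar> colors;
    RNG rng;
    for(int i = 0; i < 100; i++)
    {
        int r = rng.uniform(0, 256);
        int g = rng.uniform(0, 256);
        int b = rng.uniform(0, 256);
        colors.push_back(Scalar(r,g,b));
    }

    Mat old_frame, old_gray;
    vector<Point2f> p0, p1;

    // Take the first frame and find corners in it
    capture >> old_frame;
    cvtColor(old_frame, old_gray, COLOR_BGR2GRAY);
    goodFeaturesToTrack(old_gray, p0, 100, 0.3, 7, Mat(), 7, false, 0.04);

    // Create a mask image for drawing purposes
    Mat mask = Mat::zeros(old_frame.size(), old_frame.type());

    while(true){
        Mat frame, frame_gray;
        capture >> frame;
        if (frame.empty())
            break;
        cvtColor(frame, frame_gray, COLOR_BGR2GRAY);

        // Calculate optical flow
        vector<uchar> status;
        vector<float> err;
        TermCriteria criteria = TermCriteria((TermCriteria::COUNT) + (TermCriteria::EPS), 10, 0.03);
        calcOpticalFlowPyrLK(old_gray, frame_gray, p0, p1, status, err, Size(15,15), 2, criteria);

        vector<Point2f> good_new;
        for(size_t i = 0; i < p0.size(); i++)
        {
            // Select good points
            if(status[i] == 1) {
                good_new.push_back(p1[i]);
                // Draw the tracks
                line(mask,p1[i], p0[i], colors[i], 2);
                circle(frame, p1[i], 5, colors[i], -1);
            }
        }
        Mat img;
        add(frame, mask, img);
        imshow("Frame", img);

        int keyboard = waitKey(30);
        if (keyboard == 'q' || keyboard == 27)
            break;

        // Now update the previous frame and previous points
        old_gray = frame_gray.clone();
        p0 = good_new;
    }
}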

Python

- Code at glance:

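import numpy as np
import cv2 as cv
import argparse

parser = argparse.ArgumentParser(description='This sample demonstrates Lucas-Kanade Optical Flow calculation. \
                                              The example file can be downloaded from: \
                                              https://www.bogotobogo.com/python/OpenCV_Python/images/mean_shift_tracking/slow_traffic_small.mp4')
parser.add_argument('image', type=str, help='path to image file')
args = parser.parse_args()

cap = cv.VideoCapture(args.image)

# Parameters for Shi-Tomasi corner detection
feature_params = dict( maxCorners = 100,
                       qualityLevel = 0.3,
                       minDistance = 7,
                       blockSize = 7 )

# Parameters for Lucas-Kanade optical flow
lk_params = dict( winSize = (15, 15),
                  maxLevel = 2,
                  criteria = (cv.TERM_CRITERIA_EPS | cv.TERM_CRITERIA_COUNT, 10, 0.03))

# Create some random colors
color = np.random.randint(0, 255, (100, 3))

# Take the first frame and find corners in it
ret, old_frame = cap.read()
old_gray = cv.cvtColor(old_frame, cv.COLOR_BGR2GRAY)
p0 = cv.goodFeaturesToTrack(old_gray, mask = None, **feature_params)

# Create a mask image for drawing purposes
mask = np.zeros_like(old_frame)

while True:
    ret, frame = cap.read()
    if not ret:
        print('No frames grabbed!')
        break

    frame_gray = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

    # Calculate optical flow
    p1, st, err = cv.calcOpticalFlowPyrLK(old_gray, frame_gray, p0, None, **lk_params)

    # Select good points; stop if nothing could be tracked
    if p1 is None:
        break
    good_new = p1[st==1]
    good_old = p0[st==1]

    # Draw the tracks
    for i, (new, old) in enumerate(zip(good_new, good_old)):
        a, b = new.ravel()
        c, d = old.ravel()
        mask = cv.line(mask, (int(a), int(b)), (int(c), int(d)), color[i].tolist(), 2)
        frame = cv.circle(frame, (int(a), int(b)), 5, color[i].tolist(), -1)
    img = cv.add(frame, mask)

    cv.imshow('frame', img)
    k = cv.waitKey(30) & 0xff
    if k == 27:
        break

    # Now update the previous frame and previous points
    old_gray = frame_gray.copy()
    p0 = good_new.reshape(-1, 1, 2)

cv.destroyAllWindows()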

Java

- Code at glance:

import java.util.ArrayList;
import java.util.Random;
import org.opencv.core.*;
import org.opencv.highgui.HighGui;
import org.opencv.imgproc.Imgproc;
import org.opencv.video.Video;
import org.opencv.videoio.VideoCapture;

class OptFlow {
    public void run(String[] args) {
        // Path to the input video, taken from the first command-line argument
        String filename = args[0];
        VideoCapture capture = new VideoCapture(filename);
        if (!capture.isOpened()) {
            System.out.println("Unable to open this file");
            System.exit(-1);
        }

        // Create some random colors
        Scalar[] colors = new Scalar[100];
        Random rng = new Random();
        for (int i = 0 ; i < 100 ; i++) {
            int r = rng.nextInt(256);
            int g = rng.nextInt(256);
            int b = rng.nextInt(256);
            colors[i] = new Scalar(r, g, b);
        }

        // Take the first frame and find corners in it
        Mat old_frame = new Mat() , old_gray = new Mat();
        MatOfPoint p0MatofPoint = new MatOfPoint();
        capture.read(old_frame);
        Imgproc.cvtColor(old_frame, old_gray, Imgproc.COLOR_BGR2GRAY);
        Imgproc.goodFeaturesToTrack(old_gray, p0MatofPoint,100,0.3,7, new Mat(),7,false,0.04);
        MatOfPoint2f p0 = new MatOfPoint2f(p0MatofPoint.toArray()) , p1 = new MatOfPoint2f();

        // Create a mask image for drawing purposes
        Mat mask = Mat.zeros(old_frame.size(), old_frame.type());

        while (true) {
            Mat frame = new Mat(), frame_gray = new Mat();
            capture.read(frame);
            if (frame.empty()) {
                break;
            }
            Imgproc.cvtColor(frame, frame_gray, Imgproc.COLOR_BGR2GRAY);

            // Calculate optical flow
            MatOfByte status = new MatOfByte();
            MatOfFloat err = new MatOfFloat();
            TermCriteria criteria = new TermCriteria(TermCriteria.COUNT + TermCriteria.EPS,10,0.03);
            Video.calcOpticalFlowPyrLK(old_gray, frame_gray, p0, p1, status, err,
                    new Size(15,15),2, criteria);

            byte StatusArr[] = status.toArray();
            Point p0Arr[] = p0.toArray();
            Point p1Arr[] = p1.toArray();
            ArrayList<Point> good_new = new ArrayList<>();
            for (int i = 0; i<StatusArr.length ; i++ ) {
                // Select good points and draw the tracks
                if (StatusArr[i] == 1) {
                    good_new.add(p1Arr[i]);
                    Imgproc.line(mask, p1Arr[i], p0Arr[i], colors[i],2);
                    Imgproc.circle(frame, p1Arr[i],5, colors[i],-1);
                }
            }
            Mat img = new Mat();
            Core.add(frame, mask, img);
            HighGui.imshow("Frame", img);

            int keyboard = HighGui.waitKey(30);
            if (keyboard == 'q' || keyboard == 27) {
                break;
            }

            // Now update the previous frame and previous points
            old_gray = frame_gray.clone();
            Point[] good_new_arr = new Point[good_new.size()];
            good_new_arr = good_new.toArray(good_new_arr);
            p0 = new MatOfPoint2f(good_new_arr);
        }
        System.exit(0);
    }
}

public class OpticalFlowDemo {
    public static void main(String[] args) {
        // Load the native OpenCV library
        System.loadLibrary(Core.NATIVE_LIBRARY_NAME);
        new OptFlow().run(args);
    }
}

(This code doesn't check how correct the next keypoints are. So even if a feature point disappears from the image, there is a chance that optical flow finds a next point which merely looks close to it. For robust tracking, corner points should therefore be re-detected at regular intervals. The OpenCV samples include such a sample, which finds the feature points every 5 frames. It also runs a backward check of the optical flow points to select only the good ones. See samples/python/lk_track.py.)
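The backward check mentioned above can be sketched as follows. The idea is to track the points forward, track the results back to the first frame, and keep only the points that land close to where they started. This is an illustration rather than the code of the OpenCV sample: the helper name `forward_backward_mask` and the 1-pixel threshold are assumptions, and the back-tracked positions are made-up numbers instead of real tracker output.

```python
import numpy as np

def forward_backward_mask(p0, p0_back, max_error=1.0):
    """Return True for points whose back-tracked position is close to the start.

    p0      : original points, shape (N, 1, 2)
    p0_back : points tracked forward and then backward again, same shape
    """
    # Largest per-axis deviation between start and back-tracked position
    d = np.abs(p0 - p0_back).reshape(-1, 2).max(axis=-1)
    return d < max_error

p0 = np.array([[[10.0, 10.0]], [[40.0, 25.0]], [[70.0, 80.0]]], dtype=np.float32)
# Hypothetical back-tracked positions: the second point drifted by 6 pixels
p0_back = np.array([[[10.2, 9.9]], [[46.0, 25.0]], [[70.0, 80.5]]], dtype=np.float32)

good = forward_backward_mask(p0, p0_back)
print(good)  # [ True False  True]
```

In a real tracker, `p0_back` would come from a second call to cv.calcOpticalFlowPyrLK() with the two frames swapped and the forward-tracked points as input.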

See the results we got:

[Image: result of the Lucas-Kanade tracking sample]

Dense Optical Flow in OpenCV

The Lucas-Kanade method computes optical flow for a sparse feature set (in our example, corners detected using the Shi-Tomasi algorithm). OpenCV provides another algorithm to find the dense optical flow. It computes the optical flow for all the points in the frame. It is based on Gunnar Farneback's algorithm, which is explained in "Two-Frame Motion Estimation Based on Polynomial Expansion" by Gunnar Farneback (2003).

The sample below shows how to find the dense optical flow using the above algorithm. We get a 2-channel array with optical flow vectors, \((u,v)\). We find their magnitude and direction, and color-code the result for better visualization. Direction corresponds to the Hue value of the image. Magnitude corresponds to the Value plane. See the code below:
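The color coding described above can be tried on a tiny hand-made flow field without any video. The sketch below uses NumPy only and the hypothetical helper `flow_to_hue_value`. It follows the same idea as the samples (angle halved to fit the 0 to 180 hue range of 8-bit HSV, magnitude min-max normalized to 0 to 255), but computes the angle with `np.arctan2` rather than an OpenCV call.

```python
import numpy as np

def flow_to_hue_value(flow):
    """Map a (H, W, 2) flow field to hue (direction) and value (magnitude) planes."""
    u, v = flow[..., 0], flow[..., 1]
    mag = np.hypot(u, v)
    # Direction in [0, 2*pi), then halved degrees so that hue lies in [0, 180)
    ang = np.mod(np.arctan2(v, u), 2 * np.pi)
    hue = ang * 180 / np.pi / 2
    # Min-max normalize the magnitude to [0, 255]; a constant field maps to 0
    span = mag.max() - mag.min()
    value = np.zeros_like(mag) if span == 0 else (mag - mag.min()) / span * 255
    return hue, value

# 2x2 flow field: right (length 2), down (length 1), left (length 3), no motion
flow = np.array([[[2.0, 0.0], [0.0, 1.0]],
                 [[-3.0, 0.0], [0.0, 0.0]]])
hue, value = flow_to_hue_value(flow)
print(hue)    # approximately [[0, 45], [90, 0]]
print(value)  # approximately [[170, 85], [255, 0]]
```

To display the result, the hue and value planes would be combined with a full saturation plane and converted from HSV to BGR, as the samples below do.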

C++

- Code at glance:

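#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;
using namespace std;

int main()
{
    // vtest.avi is the sample video named in the Lucas-Kanade example; adjust the path as needed
    VideoCapture capture(samples::findFile("vtest.avi"));
    if (!capture.isOpened()){
        cerr << "Unable to open file!" << endl;
        return 0;
    }

    Mat frame1, prvs;
    capture >> frame1;
    cvtColor(frame1, prvs, COLOR_BGR2GRAY);

    while(true){
        Mat frame2, next;
        capture >> frame2;
        if (frame2.empty())
            break;
        cvtColor(frame2, next, COLOR_BGR2GRAY);

        Mat flow(prvs.size(), CV_32FC2);
        calcOpticalFlowFarneback(prvs, next, flow, 0.5, 3, 15, 3, 5, 1.2, 0);

        // Split the flow into (u, v) and convert to magnitude and angle in degrees
        Mat flow_parts[2];
        split(flow, flow_parts);
        Mat magnitude, angle, magn_norm;
        cartToPolar(flow_parts[0], flow_parts[1], magnitude, angle, true);
        normalize(magnitude, magn_norm, 0.0f, 1.0f, NORM_MINMAX);
        angle *= ((1.f / 360.f) * (180.f / 255.f));

        // Build the HSV image: hue = direction, value = magnitude
        Mat _hsv[3], hsv, hsv8, bgr;
        _hsv[0] = angle;
        _hsv[1] = Mat::ones(angle.size(), CV_32F);
        _hsv[2] = magn_norm;
        merge(_hsv, 3, hsv);
        hsv.convertTo(hsv8, CV_8U, 255.0);
        cvtColor(hsv8, bgr, COLOR_HSV2BGR);

        imshow("frame2", bgr);

        int keyboard = waitKey(30);
        if (keyboard == 'q' || keyboard == 27)
            break;

        prvs = next;
    }
}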

Python

- Code at glance:

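import numpy as np
import cv2 as cv

# vtest.avi is the sample video named in the Lucas-Kanade example; adjust the path as needed
cap = cv.VideoCapture(cv.samples.findFile("vtest.avi"))
ret, frame1 = cap.read()
prvs = cv.cvtColor(frame1, cv.COLOR_BGR2GRAY)
hsv = np.zeros_like(frame1)
hsv[..., 1] = 255

while True:
    ret, frame2 = cap.read()
    if not ret:
        print('No frames grabbed!')
        break

    next = cv.cvtColor(frame2, cv.COLOR_BGR2GRAY)
    flow = cv.calcOpticalFlowFarneback(prvs, next, None, 0.5, 3, 15, 3, 5, 1.2, 0)

    # Direction goes to hue, magnitude goes to value
    mag, ang = cv.cartToPolar(flow[..., 0], flow[..., 1])
    hsv[..., 0] = ang*180/np.pi/2
    hsv[..., 2] = cv.normalize(mag, None, 0, 255, cv.NORM_MINMAX)
    bgr = cv.cvtColor(hsv, cv.COLOR_HSV2BGR)

    cv.imshow('frame2', bgr)
    k = cv.waitKey(30) & 0xff
    if k == 27:
        break
    elif k == ord('s'):
        # Save the current frame and its flow visualization (file names chosen for illustration)
        cv.imwrite('opticalfb.png', frame2)
        cv.imwrite('opticalhsv.png', bgr)
    prvs = next

cv.destroyAllWindows()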

Java

- Code at glance:

import java.util.ArrayList;
import org.opencv.core.*;
import org.opencv.highgui.HighGui;
import org.opencv.imgproc.Imgproc;
import org.opencv.video.Video;
import org.opencv.videoio.VideoCapture;

class OptFlowDense {
    public void run(String[] args) {
        // Path to the input video, taken from the first command-line argument
        String filename = args[0];
        VideoCapture capture = new VideoCapture(filename);
        if (!capture.isOpened()) {
            System.out.println("Unable to open file!");
            System.exit(-1);
        }

        Mat frame1 = new Mat() , prvs = new Mat();
        capture.read(frame1);
        Imgproc.cvtColor(frame1, prvs, Imgproc.COLOR_BGR2GRAY);

        while (true) {
            Mat frame2 = new Mat(), next = new Mat();
            capture.read(frame2);
            if (frame2.empty()) {
                break;
            }
            Imgproc.cvtColor(frame2, next, Imgproc.COLOR_BGR2GRAY);

            Mat flow = new Mat(prvs.size(), CvType.CV_32FC2);
            Video.calcOpticalFlowFarneback(prvs, next, flow,0.5,3,15,3,5,1.2,0);

            // Split the flow into (u, v) and convert to magnitude and angle in degrees
            ArrayList<Mat> flow_parts = new ArrayList<>(2);
            Core.split(flow, flow_parts);
            Mat magnitude = new Mat(), angle = new Mat(), magn_norm = new Mat();
            Core.cartToPolar(flow_parts.get(0), flow_parts.get(1), magnitude, angle, true);
            Core.normalize(magnitude, magn_norm,0.0,1.0, Core.NORM_MINMAX);
            float factor = (float) ((1.0/360.0)*(180.0/255.0));
            Mat new_angle = new Mat();
            Core.multiply(angle, new Scalar(factor), new_angle);

            // Build the HSV image: hue = direction, value = magnitude
            ArrayList<Mat> _hsv = new ArrayList<>() ;
            Mat hsv = new Mat(), hsv8 = new Mat(), bgr = new Mat();
            _hsv.add(new_angle);
            _hsv.add(Mat.ones(angle.size(), CvType.CV_32F));
            _hsv.add(magn_norm);
            Core.merge(_hsv, hsv);
            hsv.convertTo(hsv8, CvType.CV_8U, 255.0);
            Imgproc.cvtColor(hsv8, bgr, Imgproc.COLOR_HSV2BGR);

            HighGui.imshow("frame2", bgr);

            int keyboard = HighGui.waitKey(30);
            if (keyboard == 'q' || keyboard == 27) {
                break;
            }

            prvs = next;
        }
        System.exit(0);
    }
}

public class OpticalFlowDenseDemo {
    public static void main(String[] args) {
        // Load the native OpenCV library
        System.loadLibrary(Core.NATIVE_LIBRARY_NAME);
        new OptFlowDense().run(args);
    }
}

See the result below:

[Image: result of the dense optical flow sample]
