What is the most common way to debug computer vision applications? Usually the answer is temporary, hacked-together custom code that must be removed again before a release build.

If the program is compiled without visual debugging (see CMakeLists.txt below) the only result is some information printed to the command line. We want to demonstrate how much debugging or development functionality is added by just a few lines of cvv commands.

#define CVVISUAL_DEBUGMODE
// ...

template<class T> std::string toString(const T& p_arg)
// ...

main(int argc, char** argv)
// ...
      "{ help h usage ? | | show this message }"
      "{ width W | 0| camera resolution width. leave at 0 to use defaults }"
      "{ height H | 0| camera resolution height. leave at 0 to use defaults }";
// ...
  if (parser.has("help")) {
    parser.printMessage();
// ...
  int res_w = parser.get<int>("width");
  int res_h = parser.get<int>("height");
// ...
  if (!capture.isOpened()) {
    std::cout << "Could not open VideoCapture" << std::endl;
// ...
  if (res_w>0 && res_h>0) {
    printf("Setting resolution to %dx%d\n", res_w, res_h);
// ...
  std::vector<cv::KeyPoint> prevKeypoints;
// ...
  int maxFeatureCount = 500;
  Ptr<ORB> detector = ORB::create(maxFeatureCount);
// ...
  for (int imgId = 0; imgId < 10; imgId++) {
// ...
    printf("%d: image captured\n", imgId);
// ...
    std::string imgIdString{"imgRead"};
// ...
    std::vector<cv::KeyPoint> keypoints;
// ...
    printf("%d: detected %zd keypoints\n", imgId, keypoints.size());
// ...
    if (!prevImgGray.empty()) {
      std::vector<cv::DMatch> matches;
      matcher.match(prevDescriptors, descriptors, matches);
      printf("%d: all matches size=%zd\n", imgId, matches.size());
      std::string allMatchIdString{"all matches "};
// ...
      double bestRatio = 0.8;
      std::sort(matches.begin(), matches.end());
      matches.resize(int(bestRatio * matches.size()));
      printf("%d: best matches size=%zd\n", imgId, matches.size());
      std::string bestMatchIdString{"best " + toString(bestRatio) + " matches "};
// ...
    prevImgGray = imgGray;
    prevKeypoints = keypoints;
    prevDescriptors = descriptors;
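The listing declares a `toString` helper and uses it to build the description strings, but only its signature is visible. A minimal sketch of such a helper, assuming it simply streams the value through a `std::ostringstream` (the body is an assumption, not taken from the listing):

```cpp
#include <sstream>
#include <string>

// Convert any streamable value to its textual form.
// Assumption: this body is illustrative; the listing shows only the signature.
template <class T>
std::string toString(const T& p_arg)
{
    std::ostringstream ss;
    ss << p_arg;  // relies on operator<< being defined for T
    return ss.str();
}
```

With this sketch, `"best " + toString(0.8) + " matches "` evaluates to `best 0.8 matches `, which is the kind of label that shows up as the description of a call in the overview.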


cmake_minimum_required(VERSION 2.8)
project(cvvisual_test)

SET(CMAKE_PREFIX_PATH ~/software/opencv/install)

SET(CMAKE_CXX_COMPILER "g++-4.8")
SET(CMAKE_CXX_FLAGS "-std=c++11 -O2 -pthread -Wall -Werror")

# (un)set: cmake -DCVV_DEBUG_MODE=OFF ..
OPTION(CVV_DEBUG_MODE "cvvisual-debug-mode" ON)
if(CVV_DEBUG_MODE MATCHES ON)
  set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DCVVISUAL_DEBUGMODE")
endif()

FIND_PACKAGE(OpenCV REQUIRED)
include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(cvvt main.cpp)
target_link_libraries(cvvt
  opencv_core opencv_videoio opencv_imgproc opencv_features2d
  opencv_cvv
)

- We compile the program either using the above CMakeLists.txt with the option CVV_DEBUG_MODE=ON (cmake -DCVV_DEBUG_MODE=ON) or by passing the corresponding define CVVISUAL_DEBUGMODE to the compiler (e.g. g++ -DCVVISUAL_DEBUGMODE).
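To illustrate why a single define can switch debugging on and off without touching the calls themselves, here is a minimal sketch of the general pattern. The macro `DEMO_DEBUGMODE` and the functions `debugShow`, `debugLog` and `processFrames` are hypothetical stand-ins for illustration, not cvv's actual implementation:

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical record of everything handed to the "debug GUI".
inline std::vector<std::string>& debugLog()
{
    static std::vector<std::string> log;
    return log;
}

#ifdef DEMO_DEBUGMODE
// Debug build: record the call (a real tool would show a window and block).
inline void debugShow(const std::string& description)
{
    debugLog().push_back(description);
}
#else
// Build without the define: the call stays in the source but does nothing.
inline void debugShow(const std::string&) {}
#endif

// Application code is identical in both builds.
inline int processFrames(int frameCount)
{
    assert(frameCount >= 0);
    int processed = 0;
    for (int i = 0; i < frameCount; ++i) {
        debugShow("frame " + std::to_string(i));
        ++processed;
    }
    return processed;
}
```

Compiled with `-DDEMO_DEBUGMODE`, every `debugShow` call is recorded; compiled without it, the same source produces no debug activity, so the calls do not have to be deleted for a release build.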

The first cvv call simply shows the image (similar to imshow) with the imgIdString as comment.

The image is added to the overview tab in the visual debug GUI and the cvv call blocks.

The image can then be selected and viewed.

Whenever you want to continue in the code, i.e. unblock the cvv call, you can either continue until the next cvv call (Step), continue until the last cvv call (*>>*) or run the application until it exits (Close).

We decide to press the green Step button.

The next cvv calls are used to debug all kinds of filter operations, i.e. operations that take a picture as input and return a picture as output.

As with every cvv call, you first end up in the overview.

We decide not to care about the conversion to grayscale and press Step.

If you open the filter call, you will end up in the so-called "DefaultFilterView". Both images are shown next to each other and you can zoom into them in sync.

When you go to very high zoom levels, each pixel is annotated with its numeric values.


We press Step twice and have a look at the dilated image.

The DefaultFilterView showing both images


Now we use the View selector in the top right and select the "DualFilterView". We select "Changed Pixels" as filter and apply it (middle image).
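As a rough idea of what a "Changed Pixels" style comparison computes, the sketch below marks every position where two equally sized single-channel images differ. The `Image` type and the `changedPixels` function are hypothetical; this tutorial does not show how cvv's own filter is implemented, and it may work differently:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical row-major, single-channel 8-bit image.
struct Image {
    std::size_t width;
    std::size_t height;
    std::vector<std::uint8_t> pixels;  // size == width * height
};

// Return a mask that is 255 where the two images differ and 0 elsewhere.
Image changedPixels(const Image& original, const Image& result)
{
    assert(original.width == result.width && original.height == result.height);
    assert(original.pixels.size() == original.width * original.height);
    assert(result.pixels.size() == result.width * result.height);

    Image mask{original.width, original.height,
               std::vector<std::uint8_t>(original.pixels.size(), 0)};
    for (std::size_t i = 0; i < original.pixels.size(); ++i) {
        if (original.pixels[i] != result.pixels[i]) {
            mask.pixels[i] = 255;
        }
    }
    return mask;
}
```

Applied to an image and its filtered version, such a mask highlights exactly the regions the filter touched, which is what makes this kind of view useful for judging a filter operation.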

After taking a close look at these images, perhaps using different views, filters or other GUI features, we decide to let the program run through. Therefore we press the yellow *>>* button.

The program will block at cvv::finalShow() and display the overview with everything that was passed to cvv in the meantime.

The cvv debugDMatch call is used in a situation where there are two images each with a set of descriptors that are matched to each other.

We pass both images, both sets of keypoints and their matching to the visual debug module.

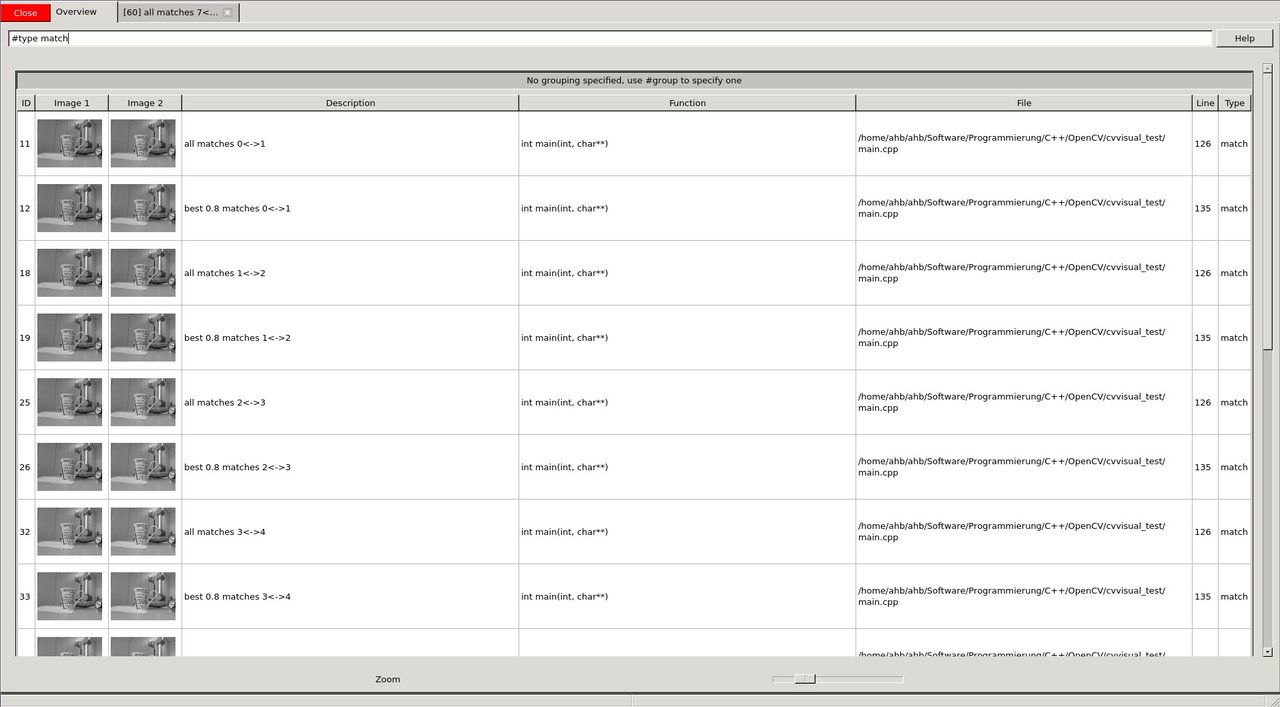

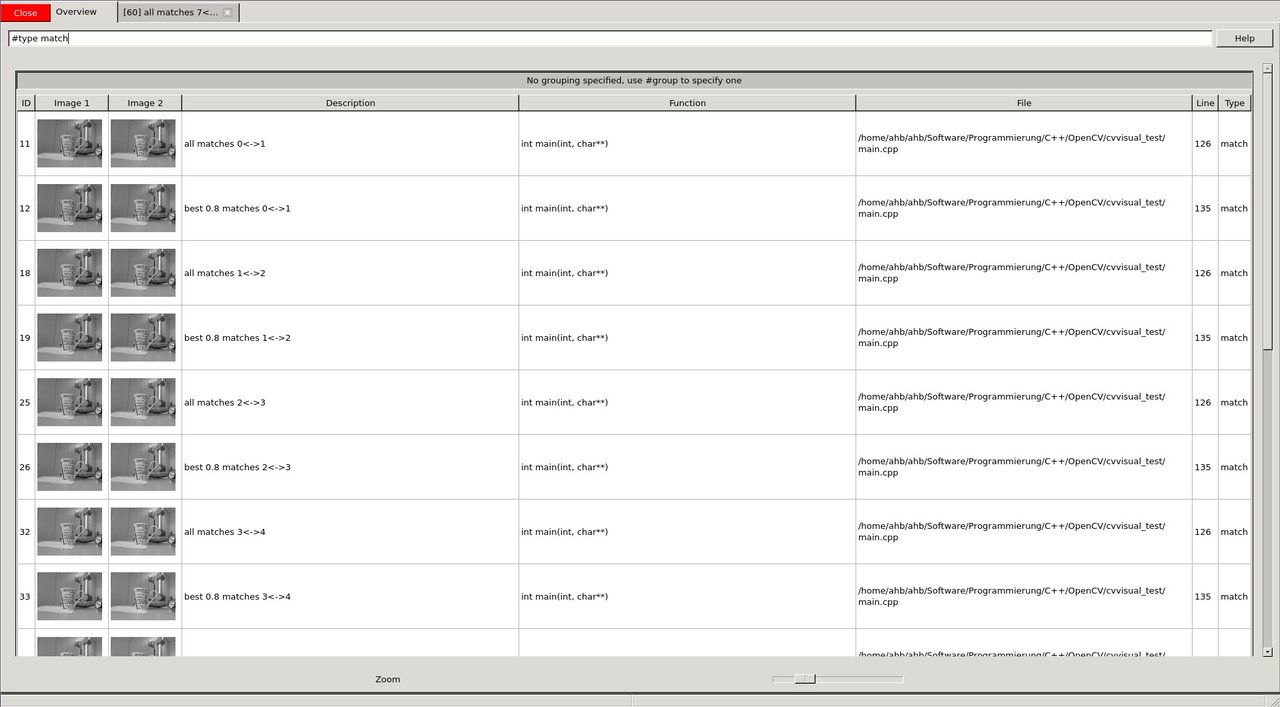

Since we want to have a look at matches, we use the filter capabilities (*#type match*) in the overview to only show match calls.

We want to have a closer look at one of them, e.g. to tune our parameters that use the matching. The view has various settings for how to display keypoints and matches. Furthermore, there is a mouseover tooltip.

We see (visual debugging!) that there are many bad matches. We decide that only 70% of the matches should be shown - those 70% with the lowest match distance.
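The same idea, keeping only the fraction of matches with the lowest distance, appears in the sample code as a sort followed by a resize. A minimal self-contained sketch, assuming a hypothetical `Match` struct in place of `cv::DMatch` and a hypothetical helper named `keepBest`:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::DMatch with only the fields needed here.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Keep the `ratio` fraction of matches with the lowest distance.
std::vector<Match> keepBest(std::vector<Match> matches, double ratio)
{
    assert(ratio >= 0.0 && ratio <= 1.0);
    // Best matches first: smaller distance means a closer descriptor pair.
    std::sort(matches.begin(), matches.end(),
              [](const Match& a, const Match& b) { return a.distance < b.distance; });
    // Truncate toward zero, as the int() cast in the sample code does.
    matches.resize(static_cast<std::size_t>(ratio * matches.size()));
    return matches;
}
```

Calling `keepBest(matches, 0.5)` on ten matches leaves the five with the smallest distance. In the GUI the same selection is made interactively, so the threshold can be tuned by eye before it is written into the code.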

Having successfully reduced the visual distraction, we want to see more clearly what changed between the two images. We select the "TranslationMatchView", which shows in a different way where each keypoint was matched to.

It is easy to see that the cup was moved to the left between the two images.
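What the eye picks up in such a view can also be expressed numerically. The sketch below averages the displacement between matched keypoint positions; the `Point` type and the `meanDisplacement` function are hypothetical and only illustrate the idea, they are not part of cvv:

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical 2D image position (x grows to the right, y grows downward).
struct Point {
    float x;
    float y;
};

// Average displacement from the first to the second point of each matched pair.
Point meanDisplacement(const std::vector<std::pair<Point, Point>>& pairs)
{
    assert(!pairs.empty());
    float dx = 0.0f;
    float dy = 0.0f;
    for (const auto& p : pairs) {
        dx += p.second.x - p.first.x;
        dy += p.second.y - p.first.y;
    }
    const float n = static_cast<float>(pairs.size());
    return Point{dx / n, dy / n};
}
```

A negative mean `x` component corresponds to a shift to the left between the two images. Bad matches distort such an average, which is one more reason to inspect and filter the matches visually first.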

Although cvv is all about interactively seeing computer vision bugs, it is complemented by a "RawView" that lets you look at the underlying numeric data.

There are many more useful features contained in the cvv GUI. For instance, one can group the overview tab.


Result
