To track an object, we need a video and the object's position in the first frame.

To run the code, pass three arguments: the input video path, the output video path, and a file that stores the object's bounding box under the key bounding_box.

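    #include <opencv2/opencv.hpp>
    // ... (lines omitted in this excerpt)
    const double akaze_thresh = 3e-4;     // AKAZE detection threshold
    const double ransac_thresh = 2.5f;    // RANSAC reprojection threshold for the homography estimate
    const double nn_match_ratio = 0.8f;   // nearest-neighbour ratio test threshold
    const int bb_min_inliers = 100;       // minimum number of inliers required to draw the bounding box
    const int stats_update_period = 10;   // on-screen statistics are refreshed every 10 frames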
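The constant nn_match_ratio drives a nearest-neighbour ratio test: a match is kept only when its distance is below that fraction of the second-best candidate's distance. A minimal standalone sketch of the test follows, using only the standard library. TwoNearest and ratioTest are hypothetical names introduced for illustration; they are not part of OpenCV or of this sample.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one 2-nearest-neighbour result: the distances
// from a query descriptor to its best and second-best candidates.
struct TwoNearest {
    double best;
    double second;
};

// Returns the indices of the queries whose best match passes the ratio test,
// i.e. best < ratio * second.
std::vector<std::size_t> ratioTest(const std::vector<TwoNearest>& knn, double ratio)
{
    assert(ratio > 0.0);
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < knn.size(); ++i) {
        if (knn[i].best < ratio * knn[i].second) {
            kept.push_back(i);
        }
    }
    return kept;
}
```

With a ratio of 0.8, the distance pairs (10, 20) and (5, 50) pass, while (18, 20) is rejected because 18 is not below 0.8 * 20 = 16.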

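    // Excerpt from the Tracker class declaration
    void setFirstFrame(const Mat frame, vector<Point2f> bb, string title, Stats& stats);
    Mat process(const Mat frame, Stats& stats);
    // ... (lines omitted in this excerpt)
    Mat first_frame, first_desc;   // first frame and its descriptors
    vector<KeyPoint> first_kp;     // keypoints detected in the first frame
    vector<Point2f> object_bb;     // object bounding box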

    void Tracker::setFirstFrame(const Mat frame, vector<Point2f> bb, string title, Stats& stats)
    {
        first_frame = frame.clone();
        // ... (line omitted in this excerpt)
        stats.keypoints = (int)first_kp.size();
        drawBoundingBox(first_frame, bb);
        // ... (lines omitted in this excerpt)
    }

    Mat Tracker::process(const Mat frame, Stats& stats)
    {
        // ... (lines omitted in this excerpt)
        stats.keypoints = (int)kp.size();

        vector< vector<DMatch> > matches;
        vector<KeyPoint> matched1, matched2;
        matcher->knnMatch(first_desc, desc, matches, 2);
        // Keep a match only if it is clearly better than the second-best candidate
        for(unsigned i = 0; i < matches.size(); i++) {
            if(matches[i][0].distance < nn_match_ratio * matches[i][1].distance) {
                matched1.push_back(first_kp[matches[i][0].queryIdx]);
                matched2.push_back(      kp[matches[i][0].trainIdx]);
            }
        }
        stats.matches = (int)matched1.size();

        Mat inlier_mask, homography;
        vector<KeyPoint> inliers1, inliers2;
        vector<DMatch> inlier_matches;
        if(matched1.size() >= 4) {
            // ... (line omitted in this excerpt: start of the call)
                    RANSAC, ransac_thresh, inlier_mask);
        }

        if(matched1.size() < 4 || homography.empty()) {
            // ... (line omitted in this excerpt)
            hconcat(first_frame, frame, res);
            // ... (lines omitted in this excerpt)
        }
        for(unsigned i = 0; i < matched1.size(); i++) {
            // ... (line omitted in this excerpt)
                int new_i = static_cast<int>(inliers1.size());
                inliers1.push_back(matched1[i]);
                inliers2.push_back(matched2[i]);
                inlier_matches.push_back(DMatch(new_i, new_i, 0));
            // ... (line omitted in this excerpt)
        }
        stats.inliers = (int)inliers1.size();
        stats.ratio = stats.inliers * 1.0 / stats.matches;

        vector<Point2f> new_bb;
        // ... (lines omitted in this excerpt)
        if(stats.inliers >= bb_min_inliers) {
            drawBoundingBox(frame_with_bb, new_bb);
        }
        // ... (line omitted in this excerpt)
        drawMatches(first_frame, inliers1, frame_with_bb, inliers2,
        // ... (remaining arguments and lines omitted in this excerpt)
    }
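The loop over matched1 copies the surviving matches into new vectors and gives each pair the same index in both, which is why DMatch(new_i, new_i, 0) stays valid for drawing. The sketch below shows that compaction in isolation, assuming a per-match 0/1 mask such as a robust estimator might produce. keepInliers and IndexPair are hypothetical names used only for illustration, not OpenCV API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a match record: positions of the paired items
// in the two compacted vectors.
struct IndexPair {
    int query;
    int train;
};

// Copies the elements of a and b whose mask entry is non-zero into inliersA
// and inliersB, and returns one index pair per copied element. Both indices
// of a pair are equal because the two output vectors grow in lockstep.
template <typename T>
std::vector<IndexPair> keepInliers(const std::vector<T>& a,
                                   const std::vector<T>& b,
                                   const std::vector<unsigned char>& mask,
                                   std::vector<T>& inliersA,
                                   std::vector<T>& inliersB)
{
    assert(a.size() == b.size() && a.size() == mask.size());
    std::vector<IndexPair> pairs;
    for (std::size_t i = 0; i < a.size(); ++i) {
        if (mask[i]) {
            const int newIndex = static_cast<int>(inliersA.size());
            inliersA.push_back(a[i]);
            inliersB.push_back(b[i]);
            pairs.push_back({newIndex, newIndex});
        }
    }
    return pairs;
}
```

Re-indexing matters because the original match indices refer to the full keypoint lists, while the drawing step only receives the compacted ones.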

108 int main(

int argc,

char **argv)

111 cerr <<

"Usage: " << endl <<

112 "akaze_track input_path output_path bounding_box" << endl;

122 if(!video_in.isOpened()) {

123 cerr <<

"Couldn't open " << argv[1] << endl;

126 if(!video_out.isOpened()) {

127 cerr <<

"Couldn't open " << argv[2] << endl;

133 if(fs[

"bounding_box"].empty()) {

134 cerr <<

"Couldn't read bounding_box from " << argv[3] << endl;

137 fs[

"bounding_box"] >> bb;

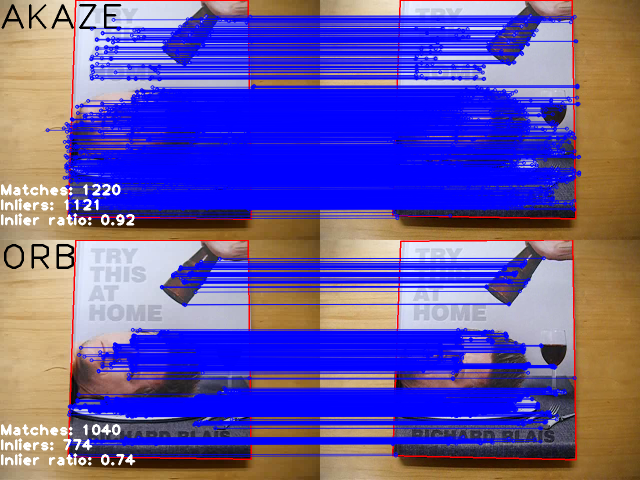

139 Stats stats, akaze_stats, orb_stats;

145 Tracker akaze_tracker(akaze, matcher);

146 Tracker orb_tracker(orb, matcher);

150 akaze_tracker.setFirstFrame(frame, bb,

"AKAZE", stats);

151 orb_tracker.setFirstFrame(frame, bb,

"ORB", stats);

153 Stats akaze_draw_stats, orb_draw_stats;

155 Mat akaze_res, orb_res, res_frame;

156 for(

int i = 1; i < frame_count; i++) {

157 bool update_stats = (i % stats_update_period == 0);

160 akaze_res = akaze_tracker.process(frame, stats);

161 akaze_stats += stats;

163 akaze_draw_stats = stats;

167 orb_res = orb_tracker.process(frame, stats);

170 orb_draw_stats = stats;

173 drawStatistics(akaze_res, akaze_draw_stats);

174 drawStatistics(orb_res, orb_draw_stats);

175 vconcat(akaze_res, orb_res, res_frame);

176 video_out << res_frame;

177 cout << i <<

"/" << frame_count - 1 << endl;

179 akaze_stats /= frame_count - 1;

180 orb_stats /= frame_count - 1;

181 printStatistics(

"AKAZE", akaze_stats);

182 printStatistics(

"ORB", orb_stats);
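The main loop accumulates per-frame statistics with += and divides by the number of processed frames (frame_count - 1) once the loop ends. The Stats type itself is not shown here, so the sketch below uses a hypothetical FrameStats stand-in whose fields mirror the ones used in the listing (keypoints, matches, inliers, ratio). Integer division for the counters is an assumption of this sketch.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for the sample's Stats structure.
struct FrameStats {
    int keypoints = 0;
    int matches = 0;
    int inliers = 0;
    double ratio = 0.0;

    // Field-wise accumulation, used once per processed frame.
    FrameStats& operator+=(const FrameStats& other)
    {
        keypoints += other.keypoints;
        matches += other.matches;
        inliers += other.inliers;
        ratio += other.ratio;
        return *this;
    }

    // Field-wise division, used once at the end to turn sums into means.
    FrameStats& operator/=(int frames)
    {
        assert(frames > 0);
        keypoints /= frames;
        matches /= frames;
        inliers /= frames;
        ratio /= frames;
        return *this;
    }
};

// Averages a sequence of per-frame statistics; returns zeros for an empty input.
FrameStats averageStats(const std::vector<FrameStats>& perFrame)
{
    FrameStats total;
    if (perFrame.empty()) {
        return total;
    }
    for (const FrameStats& s : perFrame) {
        total += s;
    }
    total /= static_cast<int>(perFrame.size());
    return total;
}
```

Guarding against an empty input avoids the division by zero that a single-frame video would otherwise cause when dividing by frame_count - 1.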


The Tracker class implements the algorithm described above using the given feature detector and descriptor matcher.
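As a rough illustration of one step such a tracker relies on, a planar homography moves the first-frame bounding-box corners into the current frame. The sketch below applies a row-major 3x3 matrix to a list of corners using only the standard library. Pt, Homography and projectCorners are hypothetical names, and the sample is assumed to delegate this step to an OpenCV routine rather than hand-written code.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical 2D point type used only in this sketch.
struct Pt {
    double x;
    double y;
};

// Row-major 3x3 homography: {h00, h01, h02, h10, h11, h12, h20, h21, h22}.
using Homography = std::array<double, 9>;

// Maps each corner through the homography and divides by the projective
// coordinate w to return to ordinary image coordinates.
std::vector<Pt> projectCorners(const std::vector<Pt>& corners, const Homography& h)
{
    std::vector<Pt> out;
    out.reserve(corners.size());
    for (const Pt& p : corners) {
        const double w = h[6] * p.x + h[7] * p.y + h[8];
        assert(std::fabs(w) > 1e-12);  // the point must not map to infinity
        out.push_back({(h[0] * p.x + h[1] * p.y + h[2]) / w,
                       (h[3] * p.x + h[4] * p.y + h[5]) / w});
    }
    return out;
}
```

A pure translation matrix shifts every corner by the same offset, and multiplying the whole matrix by a constant leaves the result unchanged, because the division by w cancels the scale.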
